In June 1952, UNIVAC entered service at the Pentagon as the first U.S. commercially built digital computer. It weighed over 13 tons and consumed 125 kW of power. Its central memory (RAM) stored only 12,000 characters, the equivalent of about 12 kilobytes (kB).

Sixty years later, the Apple iPad weighs 650 g (1.4 lb), uses 24 W of power and has 1 billion bytes (1 GB) of RAM. What amazing progress! About 20,000 times lighter, with more than 80,000 times as much memory, while using about 5,000 times less electrical power.
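For readers who want to check these ratios, here is a minimal Python sketch that recomputes them from the UNIVAC and iPad figures quoted above. It assumes the "13 tons" are metric tonnes, that each character occupies one byte, and that 1 GB means one billion bytes; the variable and function names are purely illustrative.

```python
# Figures as quoted in the text. Assumptions: "13 tons" read as metric
# tonnes, one character stored per byte, and 1 GB taken as 10**9 bytes.
UNIVAC_MASS_G = 13_000_000
UNIVAC_POWER_W = 125_000
UNIVAC_MEMORY_BYTES = 12_000

IPAD_MASS_G = 650
IPAD_POWER_W = 24
IPAD_MEMORY_BYTES = 1_000_000_000


def times(larger: float, smaller: float) -> float:
    """Return how many times `larger` exceeds `smaller`."""
    return larger / smaller


mass_ratio = times(UNIVAC_MASS_G, IPAD_MASS_G)
power_ratio = times(UNIVAC_POWER_W, IPAD_POWER_W)
memory_ratio = times(IPAD_MEMORY_BYTES, UNIVAC_MEMORY_BYTES)

print(f"about {mass_ratio:,.0f} times lighter")
print(f"about {memory_ratio:,.0f} times as much memory")
print(f"about {power_ratio:,.0f} times less electrical power")
```

Running it prints factors of about 20,000, 83,333 and 5,208. With the binary definition of a gigabyte given in the acronym list (1024 x 1024 x 1024 bytes), the memory factor rises to roughly 89,000.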

In a patent suit between Honeywell and Sperry Rand, the judge ruled in 1973 that the Atanasoff-Berry Computer, constructed in 1939-1942 at what is now Iowa State University in Ames, Iowa, provided the basic ideas for an electronic digital computer, although it only solved systems of linear equations. However, it did incorporate a primitive form of dynamic random access memory (DRAM), used binary digits, performed all calculations electronically rather than with mechanical devices, and used a system in which computation and memory are separated. It was not patented and was described in a paper.


In 1941, in Germany, Konrad Zuse created the Z3, the first fully automated, program-controlled and freely programmable computer for binary floating-point calculations. It used telephone relays and punched 35mm film for input. He built a working model and applied for a patent, but did not receive support from the Nazi government for further development.

The next electronic digital computer was Colossus, built in 1943 at Bletchley Park, England, under the direction of Tommy Flowers. It was the first programmable electronic digital machine. The Mark I, completed in February 1944, used over 1,500 tubes; the Mark II used 2,400. However, it had no internally stored programs or memory, and it was not a general-purpose machine, being designed solely for decoding German encrypted messages, which involved counting and Boolean operations.

The brilliant mathematician John von Neumann, of the Institute for Advanced Study in Princeton, wrote a paper in 1945, the "First Draft of a Report on the EDVAC", which established the basic architecture of all future digital computers. He is remembered as the "Father of digital computers".

At the University of Pennsylvania, Dr. John Mauchly and engineer J. P. Eckert, Jr. designed and built ENIAC for the U.S. Army Ordnance Department. This was the first general-purpose programmable electronic computer.

ENIAC consisted of thirty separate units and weighed over thirty tons, with 19,000 vacuum tubes. It was completed on February 15, 1946. After building ENIAC, Eckert and Mauchly left the university to form their own company. The improved EDVAC, which they had helped design for the U.S. Army Ordnance Department, was completed at the University of Pennsylvania and used an internal binary memory of about 5.5 kilobytes (kB).

Eckert-Mauchly Computer Corporation received a contract from the U.S. Bureau of the Census and started the development of the UNIVAC I. However, the company ran into financial difficulties and in 1950 was acquired by Remington Rand, becoming its Univac Division. The first unit was actually completed on March 31, 1951, but was not shipped to the customer until a year later. The second unit was delivered and placed in service at the Pentagon in June 1952. The next four units were supplied to U.S. Government agencies. In 1954 the first unit was supplied to a private company: the Appliance Division of General Electric in Louisville, for inventory control and payroll functions. Each unit cost over $1,000,000!


UNIVAC I used 5,200 vacuum tubes. Main data input/output and storage used up to 10 tape drives; each 1200 ft tape held up to 100 kilobytes (kB) of data at 128 bits/inch, with a maximum transfer rate of 7,200 characters per second.

(NOTE: A present day 2 inch thumbdrive contains up to 9 GigaBytes!)

The title of the world's first commercial digital computer goes to England. Maurice Wilkes and his team at the University of Cambridge Mathematical Laboratory, having read von Neumann's paper and watched the operation of ENIAC, developed EDSAC. It was the first complete and fully operational regular stored-program electronic digital computer. EDSAC ran its first programs on 6 May 1949, when it calculated a table of squares and a list of prime numbers. Later the project was supported by J. Lyons & Co. Ltd., a British bakery and restaurant company, which was the first commercial company in the world to actually finance the development of a computer. LEO I, based on the EDSAC design, completed its first task on 5 September 1951, when an application known as Bakeries Valuations was performed. The further development and building of LEO was taken over by English Electric Company, later International Computers Ltd (ICL).

On February 1, 1951, having received a number of orders from defense contractors based on a hypothetical specification, IBM at Poughkeepsie, NY, started the design of its first scientific/engineering computer, the model 701. On March 27, 1953, the first working unit was introduced at IBM's New York City headquarters. IBM very quickly became dominant in the computer industry, introducing in rapid sequence faster, simpler and less costly models. 1964 saw the announcement of the hugely successful IBM System/360 family, fully transistorized and using magnetic core memory.

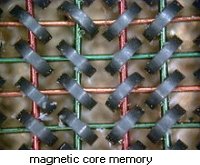

The invention of the transistor by Bardeen, Brattain and Shockley at Bell Labs in late 1947 (announced in 1948) revolutionized the computer industry. The bulk, huge power consumption and heat of vacuum tubes gave way to small cabinets. The first totally solid-state computers, with RAM in arrays of tiny donut-shaped magnetic cores, were introduced by GE and Univac in 1959.


Later, transistor memory chips replaced large magnetic core arrays. Slow and costly tape drives were replaced by magnetic disc drives, drastically reducing cost, size and power requirements while increasing speed and capability.

During the 1950s and 1960s, the number of companies producing digital mainframe computers increased: Honeywell, Burroughs, Control Data Corporation (CDC), RCA, GE, ICL, Fujitsu, Siemens, Olivetti. Then mergers thinned out the ranks. By the 21st century only a couple remained, with supercomputers as their main product.

In 1968, Gordon Moore, a chemist, and Robert Noyce, a physicist, formed a new company, Intel, to build integrated semiconductor circuits. Within three years they had created four chips that enabled a whole new generation of computers: SRAM, DRAM and ROM memory chips, followed in 1971 by the first CPU on a chip, the 4004, designed by Federico Faggin and Ted Hoff. A single Intel 4004 chip, 3 x 4 mm in size, contained 2,300 transistors and had greater computing power than the 30-ton ENIAC!


Soon the term "minicomputer" came to mean a machine in the middle range of the computing spectrum, between the smallest mainframe computers and the microcomputers that appeared later. The minicomputer brought the power of digital computing and data processing to smaller companies and to institutions such as libraries and museums that could not afford mainframe computers. So-called "dumb" terminals allowed numerous offices and sections within manufacturing plants to input data to, and obtain information from, the central minicomputer.

Numerous new companies sprang up to develop and manufacture chips, computers and terminals, among them DEC (Digital Equipment Corporation), Data General, Wang, Compaq, Hewlett Packard, Apollo, Norsk Data, NCR, Nixdorf and Olivetti. Most of them disappeared or were absorbed by others as microcomputers grew in capability in the 1990s and early 2000s.

The advent of the microprocessor in 1971 and of solid-state memory made home computing affordable. Early hobby microcomputer systems introduced around 1975, such as the Altair 8800 and the Apple I, were built around newly released low-cost 8-bit processor chips, which had enough computing power to interest experimental users. They were sold as kits: buyers had to assemble and program them, and they had no operating system.


By 1977 pre-assembled systems such as the Apple II, Commodore PET, and TRS-80 were being sold in quantity.

Intel then released its 8086/8088 family of microprocessor chips. In 1981 IBM introduced the IBM PC with the PC-DOS operating system, which was quickly adopted by small businesses and enthusiasts. However, users had to remember a series of commands to be typed on the keyboard, and the output was text on a monochrome screen or a standard typeface printer. Applications such as WordStar for word processing and VisiCalc for spreadsheet calculations quickly made the IBM PC a worldwide hit. The PC was heavily cloned, leading to mass production and consequent cost reduction throughout the 1980s.

The use of typed commands inhibited the broad acceptance of personal computers by the general public. But a revolution was on its way. In 1973, Xerox PARC (Palo Alto Research Center) developed a personal computer called the Alto, which was never sold commercially. It had a bitmapped screen and was the first computer to include a graphical user interface (GUI). These ideas were later incorporated into Xerox Star workstations, which were interconnected using Ethernet. Because of its high price the Star was not a commercial success; Xerox failed to realize the broad demand for GUI technology and did not even patent it! Steve Jobs saw a demonstration of this technology at PARC in 1979, arranged in exchange for allowing Xerox to buy shares in his company, Apple.

Apple used the technology in the Lisa personal computer in 1983 and subsequently in the Macintosh, which quickly became the computer of choice for the media industry, architects and artists.


Shortly afterwards Bill Gates's Microsoft coupled GUI technology with MS-DOS, the operating system it had developed under contract to IBM as PC-DOS, thus starting the long series of Windows operating systems (OS) and applications. Now that personal computers had become easy for everyone to use, the market exploded.

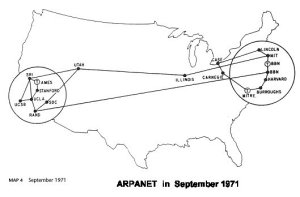

Without the Internet and the WWW, the explosive growth of personal computing and internet commerce would not have been possible. The Internet grew out of the ARPANET, created as a result of a decision made at a 1967 meeting of all participants in Advanced Research Projects Agency (ARPA) programs to facilitate the exchange of information between their computers, which used different operating systems and data protocols. At the end of 1967 ARPA awarded a small contract to the Stanford Research Institute (SRI) to develop specifications for the necessary communications system.

The University of California, Los Angeles and the Stanford Research Institute were assigned the first tasks, but information on progress was given to all participants and contributions were encouraged: "...it was a community of network researchers who believed strongly that collaboration is more powerful than competition among researchers...."

SRI issued a report by the end of 1967 which determined that dedicated minicomputers, called IMPs (Interface Message Processors), should be provided at each site, so that individual computers only had to communicate with a single standard IMP (what today we call a router). The consultants Bolt, Beranek and Newman of Boston were assigned the task of designing the IMPs and the method of passing data between them over telephone lines using packet switching. The task was completed on time: the first IMPs were delivered to UCLA, SRI, UC Santa Barbara and the University of Utah in the fall of 1969, and communications were established between those four sites. By 1983, when the military computers were split off into MILNET, there were over 300 host computers attached to ARPANET in the US and Europe.

In the meantime other networks had been established, such as X.25 networks (based on a standard developed by the International Telecommunication Union) and CompuServe. They all used packet switching but with different protocols, making it impossible for the networks to communicate with each other.

To solve this problem, Robert Kahn of ARPA and Vinton Cerf of Stanford University created a new standard called the Internet Transmission Control Program and published its draft specification in December 1974. ARPA funded the development of the software, and in 1981 the "TCP/IP" protocol was adopted. The universal Internet was born.

So far all information exchanges had been text only. It was necessary to determine a standard format for transmission of graphics, photographs, data tables, etc. The European Council for Nuclear Research (CERN) in Switzerland created an information management system, in which text could contain links and references to other works, allowing the reader to quickly jump from document to document. They created a server for publishing this style of document (called hypertext) as well as a program for reading them, called WorldWideWeb. The source code for this system was released into the public domain in 1993.

In 1994, Tim Berners-Lee, the British scientist who had created the Web at CERN, founded the World Wide Web Consortium (W3C) with support from CERN, DARPA, the European Commission and many manufacturers. Its intent was to standardize the protocols and technologies used to build the web. Although compliance is not compulsory, most manufacturers, organizations and authors of web pages want to label their products as W3C-compliant. The final holdout was Microsoft, which has now made its latest products largely compliant. This web page (and all pages at this web site) is W3C-compliant, written with open-source software, and resides on a server that is accessible in seconds from anywhere in the world. All thanks to 70 years of development work by dedicated scientists and the World Wide Web!

Acronyms used in the computer literature:

ARPA - Advanced Research Projects Agency (supported by the US Dept of Defense)

CPU - Central Processing Unit

DOS - Disk Operating System

ENIAC - Electronic Numerical Integrator And Computer

EDSAC - Electronic Delay Storage Automatic Calculator

GUI - Graphical User Interface

KB - KiloByte=1024 Bytes (1 Byte=8 bits); MB - MegaByte=1024KB; GB - GigaByte=1024MB

RAM - Random Access Memory (DRAM - Dynamic RAM, SRAM - Static RAM)

UNIVAC - Universal Automatic Computer
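The list above defines the kilobyte, megabyte and gigabyte in binary steps of 1024, while the text sometimes uses round decimal figures (12,000 characters as "about 12 kB", 1 GB as a billion bytes). The small Python sketch below, assuming the 1024-based definitions from the list, shows how a byte count converts; the function name `to_binary_units` is just an illustrative choice.

```python
def to_binary_units(n_bytes: int) -> str:
    """Express a byte count using 1 KB = 1024 Bytes, 1 MB = 1024 KB, 1 GB = 1024 MB."""
    value = float(n_bytes)
    for unit in ("Bytes", "KB", "MB"):
        if value < 1024:
            return f"{value:.1f} {unit}"
        value /= 1024  # step up to the next, 1024-times-larger unit
    return f"{value:.1f} GB"


print(to_binary_units(12_000))         # UNIVAC I memory: 11.7 KB
print(to_binary_units(1_000_000_000))  # a decimal "1 GB": 953.7 MB
print(to_binary_units(2**30))          # a binary gigabyte: 1.0 GB
```

The gap between 12,000 bytes and 11.7 KB is small, but at the gigabyte scale the two conventions differ by about 7 percent.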


Have a question or comment? Write to Syrena. He will answer as quickly as possible.

NOTE: All illustrations courtesy of Wikipedia.org.
